Test case generation use case

Overview

The application automatically generates detailed test cases for IBM Maximo Application Suite 9 (MAS 9) using a large language model from IBM watsonx.ai. Its primary purpose is to help testers rapidly produce comprehensive test cases from functional requirements. Users can either enter plain-text descriptions or upload files (such as DOCX or PDF) containing the details. The generated test cases are formatted into a table and made available for download as an Excel file.

Key Functionalities

- Automated Test Case Generation

- Input: A functional document (for example, requirement specifications, user stories, or design documents).

- Process: The tool parses the document to understand the functionalities described and then generates a set of test cases based on that understanding.

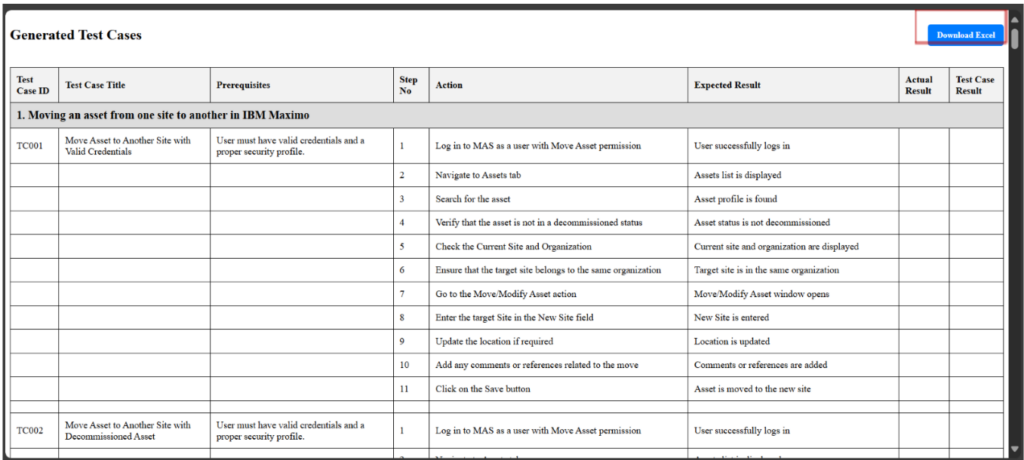

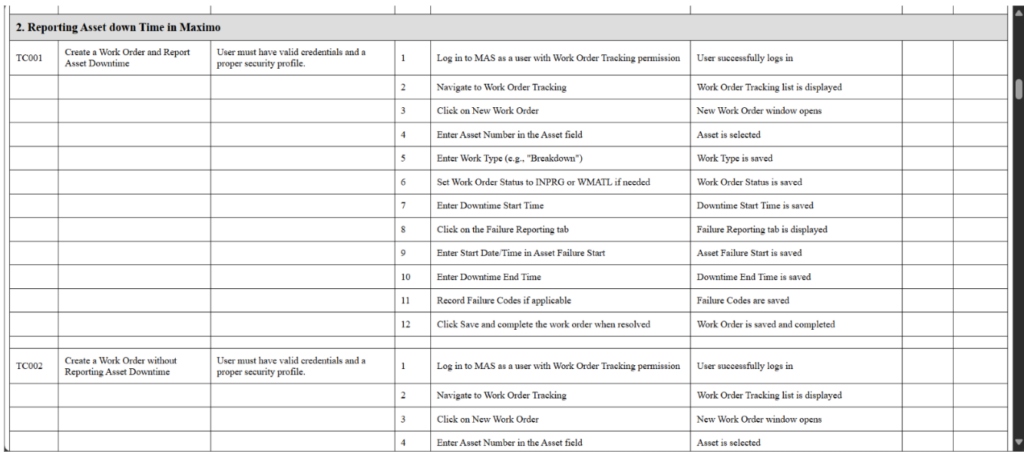

- Output: Test steps formatted in Excel. The Excel file may include columns for test scenario ID, test case title, prerequisites, actions, expected outcomes, and, in some cases, a mapping to specific requirements.

- Excel as a Deliverable Format

- Validation-Friendly Format: Excel is widely used and accepted in many organizations for test case documentation. Its tabular format ensures that test cases can be reviewed, validated, and even edited by stakeholders without specialized software.

- Downstream Integration: Excel sheets can be imported into test management tools or Continuous Integration/Continuous Deployment (CI/CD) pipelines, making it easier to automate testing or generate reports.
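As a rough illustration of the tabular output described above, the sketch below models one generated test case as a row and serializes a list of rows. It is not the application's implementation: the class name `GeneratedTestCase`, the field names, and the sample row are all hypothetical, and CSV from the Python standard library stands in for the Excel writer so that the example stays self-contained.

```python
import csv
import io
from dataclasses import asdict, dataclass, fields


@dataclass
class GeneratedTestCase:
    """One row of the output table. Field names are illustrative assumptions."""
    scenario_id: str
    title: str
    prerequisites: str
    actions: str
    expected_outcome: str
    requirement_id: str = ""  # optional mapping back to a requirement


def to_csv(test_cases):
    """Serialize test cases to CSV text with a single header row."""
    buffer = io.StringIO()
    column_names = [f.name for f in fields(GeneratedTestCase)]
    writer = csv.DictWriter(buffer, fieldnames=column_names)
    writer.writeheader()
    for case in test_cases:
        writer.writerow(asdict(case))
    return buffer.getvalue()


# Hypothetical sample row, not taken from a real functional document.
sample = [
    GeneratedTestCase(
        scenario_id="TS-001",
        title="Create work order",
        prerequisites="User is logged in",
        actions="Open the work order application; create a record; save",
        expected_outcome="Work order is saved",
        requirement_id="REQ-001",
    ),
]
csv_text = to_csv(sample)
```

Excel can open the resulting CSV directly. Producing a native Excel workbook would require a spreadsheet library, which this sketch deliberately leaves out.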

Benefits for Developers

- Enhanced Requirement Clarity

- Traceability: When developers receive a set of test cases that are directly generated from the requirements, they can quickly map the functionality to its corresponding test. This creates a clear traceability matrix between the requirements and test cases.

- Early Feedback: Developers can review the generated test cases early in the development cycle. This helps spot any potential misinterpretations in the requirements, leading to early corrections before coding begins.

- Faster Debugging and Integration

- Self-Documentation: Auto-generated test cases serve as a kind of living documentation of the system’s behaviour. Developers can use these to understand the expected behaviour of a function quickly.

- Automation Assistance: The generated test cases also provide a ready-made starting point for writing automated tests. Developers can integrate those tests into their continuous integration setup so that each change is verified against a predefined, comprehensive set of test scenarios.

- Improved Communication Between Teams

- Common Ground: When test cases are derived directly from the requirements, both developers and testers are on the same page. This minimizes the “interpretation gap” and leads to improved collaboration between the two groups.
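The traceability matrix mentioned under Enhanced Requirement Clarity can be pictured as a mapping from each requirement to the test cases that exercise it. The following is a minimal sketch under stated assumptions: the dictionary keys `scenario_id` and `requirement_id` and the sample IDs are hypothetical, and the application may store this mapping differently.

```python
from collections import defaultdict


def build_traceability(test_cases):
    """Map each requirement ID to the IDs of the test cases that cover it."""
    matrix = defaultdict(list)
    for case in test_cases:
        matrix[case["requirement_id"]].append(case["scenario_id"])
    return dict(matrix)


# Hypothetical rows, as they might be read from the exported table.
cases = [
    {"scenario_id": "TS-001", "requirement_id": "REQ-001"},
    {"scenario_id": "TS-002", "requirement_id": "REQ-001"},
    {"scenario_id": "TS-003", "requirement_id": "REQ-002"},
]
matrix = build_traceability(cases)
```

With this mapping, a developer can look up a requirement and see at a glance which test cases verify it.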

Benefits for Testers

- Efficiency in Test Planning and Execution

- Time Savings: Automating the generation of test cases saves testers hours of manually writing each test case from scratch. This efficiency allows them to focus on higher-level testing (e.g., exploratory testing, performance, or security) where human insight is crucial.

- Consistency: The generated test cases follow a standard format and structure, ensuring consistency across test suites. This is particularly useful in large projects with numerous functionalities.

- Rapid Regression and Coverage Analysis

- Regressions: As new functionalities are added or existing ones are updated, regenerating the test cases gives testers a full, up-to-date set of scenarios. This is crucial for regression testing, where existing functionality must be re-verified.

- Coverage: Automated generation helps ensure that all scenarios mentioned in the functional document are covered. It reduces the risk of human error or oversight, which in turn increases the overall test coverage.

- Simplification of Test Data Management

- Excel Integration: Many testing teams use Excel as a tool for managing test data or recording manual testing results. Having test cases readily available in this format means they can easily update, annotate, and integrate these cases within existing workflows.
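The coverage point above can be checked mechanically once each test case carries a requirement ID. The sketch below is one possible way to do it, not a feature of the application: the function name `coverage_report`, the `requirement_id` key, and the sample IDs are assumptions made for illustration.

```python
def coverage_report(requirement_ids, test_cases):
    """Report the share of requirements with at least one test case."""
    required = set(requirement_ids)
    covered = {case["requirement_id"] for case in test_cases}
    missing = sorted(required - covered)
    total = len(required)
    # Avoid dividing by zero when no requirements are supplied.
    percent = 100.0 * (total - len(missing)) / total if total else 0.0
    return {"percent": percent, "missing": missing}


# Hypothetical requirement IDs and generated test cases.
requirements = ["REQ-001", "REQ-002", "REQ-003", "REQ-004"]
generated = [
    {"scenario_id": "TS-001", "requirement_id": "REQ-001"},
    {"scenario_id": "TS-002", "requirement_id": "REQ-002"},
    {"scenario_id": "TS-003", "requirement_id": "REQ-002"},
]
report = coverage_report(requirements, generated)
```

The `missing` list points testers to requirements that still need a test case after generation.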

Real-Time Usage Scenarios

- Agile and Continuous Delivery Environments

- Sprint Planning: Prior to sprint execution, automated test case generators can quickly provide a suite of tests for all the functional documents planned for the sprint. This assists scrum teams in planning testing activities more effectively.

- CI/CD Pipelines: Once integrated with CI/CD tools, the automated tests provide immediate feedback on the impact of code changes. For example, as soon as a developer commits new code, the corresponding tests can be run automatically, ensuring that new functionality hasn’t broken the existing features.

- Regulatory and Compliance Domains

- Audit Trails: In industries where regulatory compliance is crucial (such as finance, healthcare, or aerospace), having a clear, standardized record of test cases mapped to requirements can serve as an audit trail. This documentation is useful during compliance reviews or external audits.

- Change Management: When changes occur due to regulatory updates, the test case generator can quickly produce new tests to ensure that software continues to meet legal and operational requirements.

- Cross-Functional Collaboration

- Unified Documentation: Both the development and QA teams can review the same set of test cases, ensuring a unified understanding of the expected system behaviour. This minimizes conflicts and miscommunications between teams.

- Client Demonstrations: In some cases, having a detailed set of test cases helps in client meetings or demos, showcasing that every piece of functionality is being verified as per the specification.

Summary

The application is a comprehensive test case generator that integrates an AI model to produce detailed test cases from functional requirements. It supports multiple input formats, uses robust parsing to organize the output, and offers both a web-based interface and file downloads for user convenience. The design emphasizes security, user accessibility, and ease of integration into testing workflows, making it a valuable tool for testers working with IBM Maximo Application Suite 9.

User Roles & Login

Maximo Application Suite (MAS) supports role-based access aligned with Maximo’s permissions:

Roles:

- Premium User: A user with full access to critical applications, configurations, and administrative functions. Often equivalent to system administrators or power users who manage workflows, security, and integrations. Can create new security groups, modify workflows, and integrate with external systems.

- Base User: A standard business user with access to core applications for day-to-day operations. Focused on transactional tasks like creating work orders, managing assets, or processing inventory. Can create a work order but cannot approve it (requires PLANNER role).

- Limited User: A user with restricted access, often read-only or limited to specific tasks. Typically used for auditors, temporary staff, or roles requiring minimal interaction. Can view asset history but cannot edit fields.

- Self-Service User: A non-technical user who interacts with Maximo through simplified portals (e.g., Service Request Manager, Employee Center). Typically external stakeholders such as employees, vendors, or customers. Can submit a ticket via a portal but cannot log into the Maximo UI.

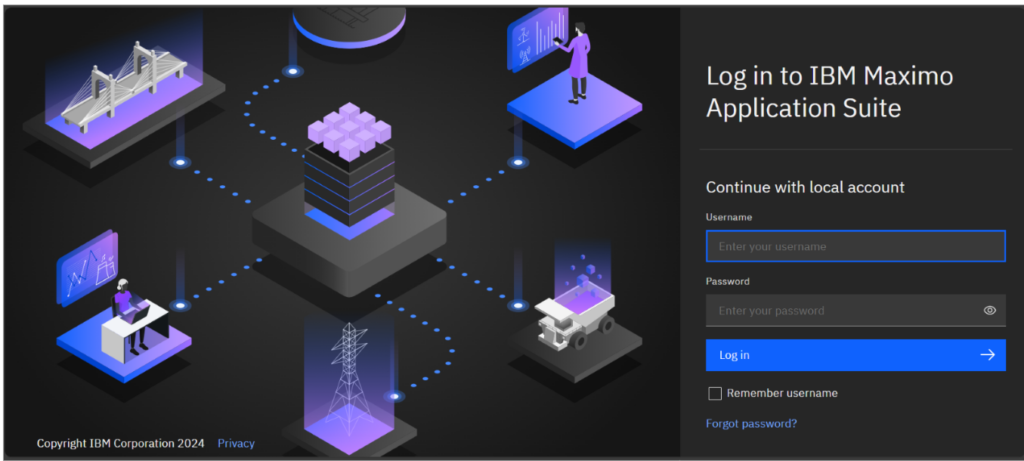
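The role examples above can be summarized as a lookup from role to permitted actions. The sketch below only encodes the examples given in the role descriptions. It is not Maximo's security model or API: the `Role` enum, the action names, and the `is_allowed` helper are hypothetical and serve only to illustrate the idea.

```python
from enum import Enum


class Role(Enum):
    PREMIUM = "Premium User"
    BASE = "Base User"
    LIMITED = "Limited User"
    SELF_SERVICE = "Self-Service User"


# Hypothetical action names, limited to the examples in the role descriptions.
PERMISSIONS = {
    Role.PREMIUM: {
        "create_security_group",
        "modify_workflow",
        "integrate_external_system",
    },
    Role.BASE: {"create_work_order"},
    Role.LIMITED: {"view_asset_history"},
    Role.SELF_SERVICE: {"submit_ticket_via_portal"},
}


def is_allowed(role, action):
    """Return True if the role's example permission set includes the action."""
    return action in PERMISSIONS.get(role, set())


base_can_create = is_allowed(Role.BASE, "create_work_order")
base_can_approve = is_allowed(Role.BASE, "approve_work_order")
```

Here `base_can_create` is true and `base_can_approve` is false, matching the Base User description, where approval requires the PLANNER role.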

Login Process:

- Navigate to Maximo Application Suite.

- Enter credentials:

- Username

- Password

- Optional: Show Password to verify input.

- Click Login to access the Maximo application with the watsonx Assistant.

- Use Forgot Password to reset credentials.

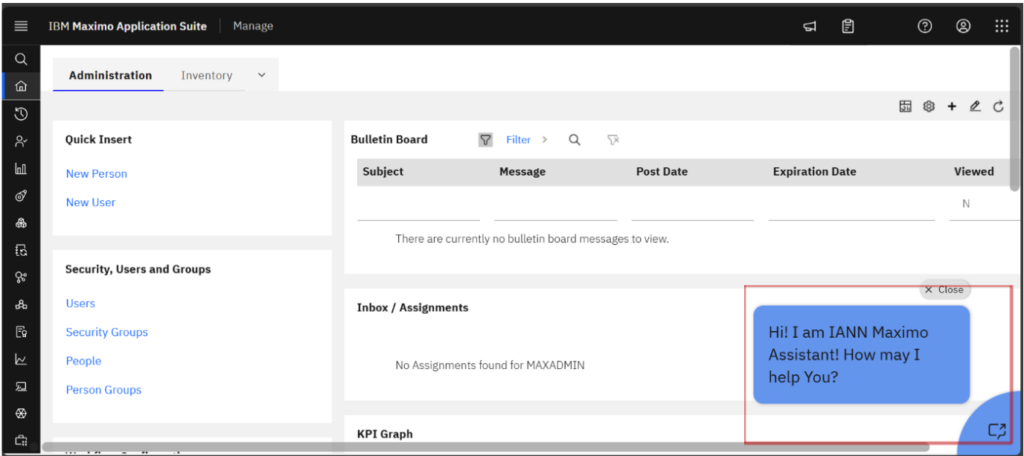

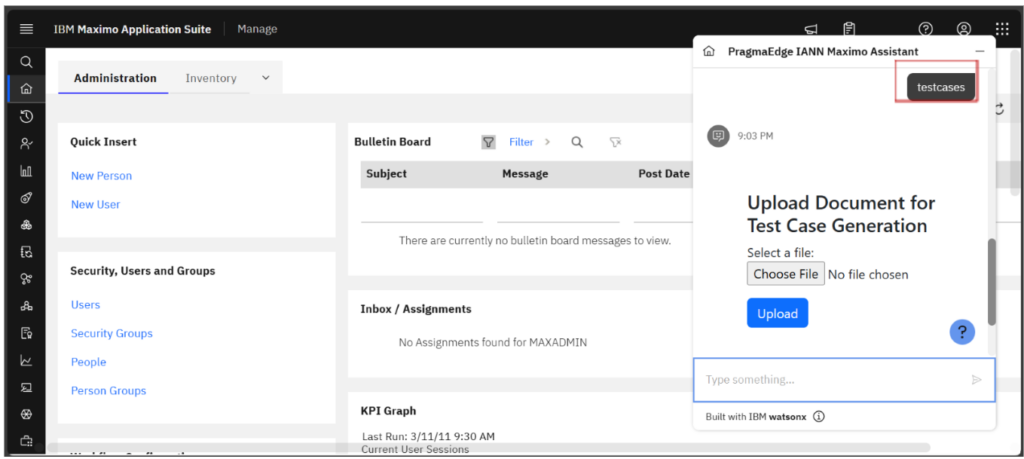

Launch the IANN Maximo Assistant by clicking on the assistant icon.

Start the process by clicking ‘Testcases’ or entering ‘Testcases’ in the assistant.

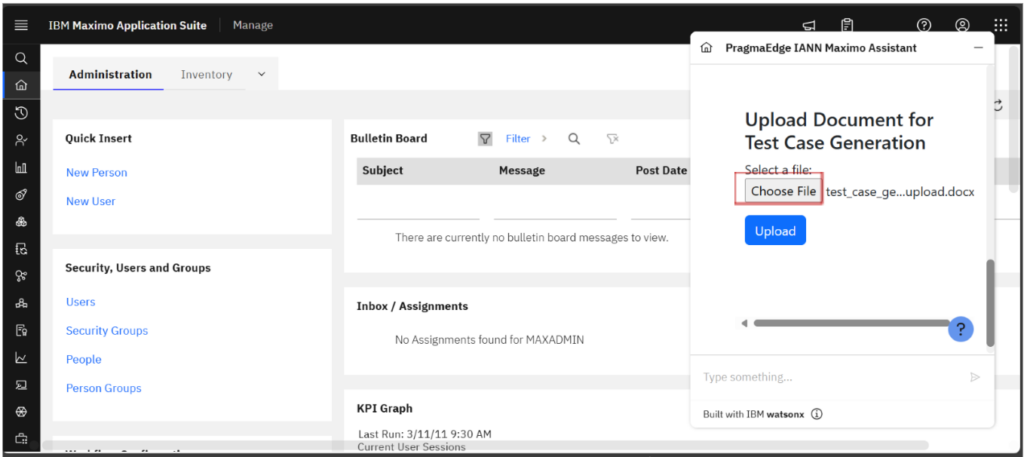

Start the file upload by selecting the ‘Choose File’ option in the assistant, which opens your local file explorer.

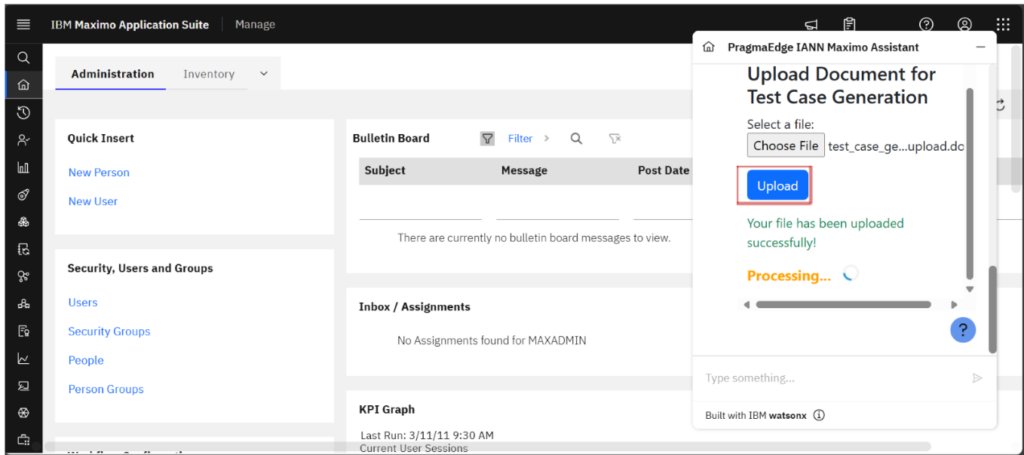

After choosing a file from your local storage, click the Upload button to start processing the file and generating test cases. The processing status is shown in the image below.

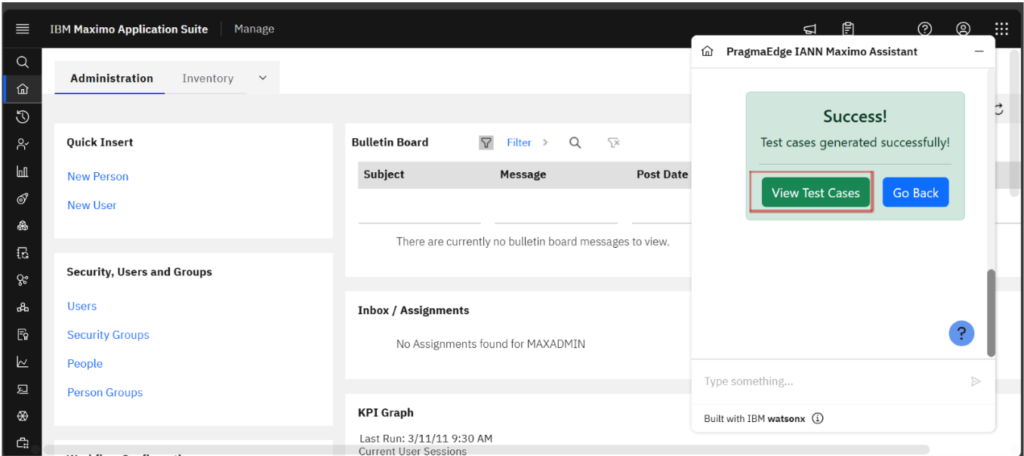
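Before uploading, it can help to confirm that the chosen file is in a format the assistant accepts. The sketch below is a simple local check, assuming that only the two formats named in the overview (DOCX and PDF) are accepted. The application may accept other formats, and the helper name `is_supported_upload` is hypothetical.

```python
from pathlib import Path

# Assumption: only the formats named in this guide are accepted.
SUPPORTED_EXTENSIONS = {".docx", ".pdf"}


def is_supported_upload(filename):
    """Check the final file extension, ignoring letter case."""
    return Path(filename).suffix.lower() in SUPPORTED_EXTENSIONS


docx_ok = is_supported_upload("requirements.DOCX")
txt_ok = is_supported_upload("notes.txt")
```

The check looks only at the file name, so it does not confirm that the file contents are a valid document.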

After the file has been processed successfully, a success message is displayed, as shown in the figure below. Two options are then available: View Test Cases and Go Back. Select View Test Cases to view the generated test cases.

After you select View Test Cases, the test cases generated from the provided functional document are displayed, as illustrated in the figures below. To download the generated test cases file to your device, choose the Download option.